On Monday, I posted an article titled The AI Misinformation Epidemic. The article introduces a series of posts that will critically examine the various sources of misinformation underlying this AI hype cycle.

The post came about for the following reason: While I had contemplated the idea for weeks, I couldn’t choose which among the many factors to focus on and which to exclude. My solution was to break down the issue into several narrower posts. The AI Misinformation Epidemic introduced the problem, sketched an outline for the series, and articulated some preliminary philosophical arguments.

To my surprise, it stirred up a frothy reaction. In a span of three days, the site received over 36,000 readers. To date, the article has received 68 comments on the original post, 274 comments on Hacker News, and 140 comments on the machine learning subreddit.

To ensure that my post contributes as little novel misinformation as possible, I’d like to briefly address the response to the article and some misconceptions shared by many commenters.

Welcome to the Club

Regarding the role played by the popular media, one common (and reasonable) response was – hey, this happens everywhere. When you’re an expert, then you realize the press doesn’t know much.

In an unusually eloquent comment, a man going only by “Jim” wrote:

This is a problem that impacts every area of expertise. Journalists are, for the most part, generalists rather than specialized correspondents on a particular area. -Jim

I buy this argument but think it requires qualification. As a jazz musician, I often lamented the failure of the press to cover the substance of the music. The only jazz writing that hit the spot for me was that by pianist Ethan Iverson (see his Do the M@th blog) who happens to be an uncommonly gifted writer in addition to being a prolific pianist (as featured in the Bad Plus).

So, in short, I don’t feel sorry for us machine learning researchers who don’t always get accurate coverage. This is a common problem. And in fact, in the short run, many of us benefit. Salaries and startup valuations are at stunning levels and the incredible demand from students (much of it fueled by media attention) for master’s and PhD degrees in machine learning in turn has created demand for machine learning professors and lecturers. However…

The Potential for Harm

Putting aside sympathy for the machine learning community, there’s another more important concern. Unlike string theory, where a cartoonish depiction of research doesn’t change anyone’s life, machine learning actually is affecting people’s lives. We interact with it every day, and the problems are real, e.g. (i) discrimination in algorithmic decision making, (ii) technological unemployment, (iii) ceding control to mindless recommender systems that optimize only clicks (think Facebook’s fake news problem), and (iv) autonomous weapons, already under development, that raise serious concerns about the ethics of warfare. So machine learning will have both immediate and long-term societal impacts, and it actually is important, for democracy to function, that the public be better informed.

In my eyes, the present situation is reminiscent of the news’ failure to adequately cover the derivatives market prior to the financial collapse. How can we hold politicians accountable for reasonably regulating the financial markets if Michael Lewis is the only pundit who understands how the derivatives markets work and it takes him over a year to write the book that explains it for the masses? [TL/DR: cloying prose but clear explanations]

Religion and the Singularity

Some readers were peeved that I referred to Kurzweil’s Singularity prophesying as religion. It’s true that in scientific communities, calling something religious can function as a Bogeyman argument. But I feel comfortable using the term here. Kurzweil has a conclusion from the outset. Man and technology will merge – the technology will explode doubly-exponentially (whatever that means), this will result in him living forever, and it will happen at a date that only he can deduce by some opaque means. This has undeniable hallmarks of religiosity.

- A holy figure / cult leader – Ray Kurzweil

- A nebulous prophecy – The Singularity, in its evangelized form, is defined such that a true believer can always claim that it has already happened and always claim that it hasn’t happened yet, and yet (of course) it is prophesied to happen at a precise date: recently revised to 2045, as gleefully reported by the Telegraph

- Unshakable axioms – Scientists fit beliefs to evidence. But Kurzweil’s Singularitarians cherry pick evidence that fits beliefs. See the strange conversation regarding doubly-exponential growth of “technology” in the original post’s comments. What precisely does technology mean here? This can shift over time (or even within a single graph), as long as something appears to fit this unshakable portion of the sacred text.

Some readers also pointed out that ‘singularity’ means many things as defined by many authors. Because the post addressed the misinformation epidemic and Kurzweil’s variety has a near monopoly on the public consciousness, I was speaking specifically about this flavor. Perhaps in a future post I could review the ideas of other, more critical thinkers on the topic more thoroughly.

Also, some readers took my dismissal of sensationalism and quasi-religiosity as a dismissal of (i) AI safety, (ii) futurism, or (iii) the dangers of unregulated development and deployment of machine learning.

To be clear, I make no such dismissal. Broadly defined, I believe that developing a rigorous study of AI safety is of fundamental importance. Already we live in a world with autonomous drones, autonomous automobiles, and one in which the military is developing autonomous weapons. Unchecked applications of machine learning have the potential to cause physical, financial, and social harm (see above).

Per Merriam-Webster, a futurist is defined as:

one who studies and predicts the future especially on the basis of current trends.

In this sense, I would consider myself to be a futurist, although perhaps more focused on the near to intermediate term than the typical futurist. I have no problem with futurism, as long as one reasons clearly and logically.

Just an Outline

Some readers pointed out that the post was just an outline of works to come. Yes, this is true.

Diversity and Inequality in AI/ML

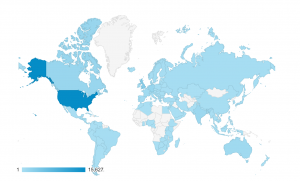

Finally, while witnessing the traffic bubble to Approximately Correct was admittedly gratifying, a disturbing trend turned up in the traffic stats. For the better part of a day or two this article was a top trending piece of machine learning news. In that time it received nearly 16,000 views from the US, 5,000 of which came from California. Traffic numbers from western European nations ranged from the hundreds to thousands each. However, the total view count from the entire African continent was less than 300. Excluding South Africa, that total falls to less than 100. As we develop technology with the potential to displace entire industries, including the agriculture, mining and transportation industries that many developing nations depend on, we should ask who owns / should own this technology? And what will happen if we decimate the employment markets in countries that reap none of the benefits of such ownership?

[removed]

“Unshakable axioms – Scientists fit beliefs to evidence. But Kurzweils Singularitarians cherry pick evidence that fits beliefs. See the absurd conversation on doubly exponential growth of “technology” in the original post’s comments.”

I presented exactly zero beliefs, because I have none.

I shared some data and this single claim: it looks like accelerating change in important technologies might continue, this could be impactful and is therefore important. This was painfully clear.

What evidence do you have of your belief that any of my claims or hypotheses are unshakeable?

[removed]

Javier Mares

You presented a graph of cherry-picked items to fit the narrative of exponentiality. Why are the many hundreds of inventions of the industrial revolution represented as just a single event while the computer and the personal computer comprise two? I imagine it’s because the creators of this chart decided upon the exponentiality narrative before constructing the chart. They are not even charting technology. The same graph shows biological evolution (emergence of reptiles, mammals, primates), culture (art), and technology (wheel, telephone, computer). The set of events is clearly chosen in an ad-hoc manner to fit the desired epochs.

ref: http://www.singularity.com/charts/page17.html

[This is a warning on language. I’m happy to present dissenting views, but not ranting. Please mind the tone]

It has now been more than 6 months since your original post “The AI Misinformation Epidemic” and I have yet to read your in-depth blog posts on the topics “THE INFLUENCER INDUSTRY”, “THE PROPHETS (PROFITS?) OF FUTURISM”, “THE FAILURE OF THE PRESS”, and “THE PERILS OF MISINFORMATION”.

Your well-worded posts seem to point to the idea that AI is not a threat to humanity, and that those who put forward that idea are ignorant plebes who have fallen for a ruse of some sort.

Do you in fact think that AI poses no risk to humanity? If so, can you defend this assertion? I would be very interested in reading such a defense (and poking holes in it).

Hi Edwin, sorry to disappoint you with the pace of my progress and hope to relieve that situation soon. Simultaneously getting a faculty position, working at Amazon AI, finishing my PhD, and writing a few papers in the interim has turned out to be a bit time consuming. A few points on this. Some of the posts I’d like to write require a serious look at people’s work. I don’t take this lightly. I’m not a takedown artist. So for example, for the Influencer Industry (actively researching) I’m reluctant to call anyone a charlatan lightly. Another point is that this was something of a warning shot or mission statement. I won’t likely follow the proposed TOC too strictly. For example, I wrote a post shortly after calling out a fluff piece by the Guardian which grossly overstated the autonomy of a humanoid bot. https://approximatelycorrect.com/2017/04/17/press-failure-guardian-meet-erica/

I’ve also tried to be active helping journalists to combat hype stories like the recent Facebook AI chatbot (that needed to be “shut down”) nonsense.

In the interim, I’ll try to answer your primary question succinctly:

It’s convenient to think either AI research could be risky and therefore Nick Bostrom/Elon Musk speak the gospel or alternatively that they’re all idiots and AI poses no risk. The more complex truth is that no one around today has a good grip on how much of a long-term risk AI research poses, or in which ways. I think there are a lot of misinformed people who have disproportionate mindshare relative to their expertise. However, that doesn’t mean that I dismiss the possibility of long-term risks or the reality of more terrestrial intermediate term risks. I state as much in the mission statement for this blog: https://approximatelycorrect.com/2016/10/01/mission-statement/

Zachary, I’ve only recently stumbled into your world. I’m a convert – your lucidity has impressed me.

You concluded this particular article with the question: “What will happen if we decimate the employment markets in countries that reap none of the benefits of such ownership?”

I live in South Africa. I’ve raised funding for tech startups in Silicon Valley, so I get the tech scene. Africa has a concept of “Ubuntu”: connectedness. The Valley appears to me to be focused on efficiency, productivity, profits. Africa (and AI) is focused on understanding, connection, empathy, collaboration – the stuff The Valley doesn’t get.

Most of Africa falls under colonial rule/influence, because of her rich natural resources, which were exploited for over a century. Hence the “agriculture, mining and transportation industries that many developing nations depend on.”

I can’t help wondering whether Africa’s real natural resource is actually “empathy”. AI is exploring the fringes of empathy. Could it be that Africa is not the basket case, but rather the future role model?

My thinking is nascent, yet pregnant with possibility. Would love to engage in a deeper conversation.

I love this idea and would like to explore it further.

Hello Zachary,

I’m extremely interested in the points you raise here, but from the other side. Thought I would jump in on the conversation.

I am a marketing writer and journalist. Much of the work I do is “translating” highly technical subjects into layperson language. As you can imagine (or know firsthand), this in itself opens up the potential for imprecision, if not inaccuracies. For some of my work – the marketing side of my work – I have the luxury of sending my articles to the subjects to review and get their opinions. For the journalism side though, I cannot send for review due to journalistic standards. This means I must be much more careful how I word things and have to have a stronger grasp on the subject. I am not perfect, but I do take the time to try to get it as right as possible.

However, I do understand the issues related to journalists “getting it wrong” and I’d like to address these points a bit more deeply from my own personal experiences.

As you quoted above from Jim, journalists do tend to be generalists. But that’s just part of the problem. These days, they also tend to be young, underpaid (perhaps not paid at all), and with little support. It’s not just writers who have been axed with the decimation of newsrooms – more crucially in my mind, it’s the editors as well. These are the people with the years of experience and knowledge who, in the past, could nurture and mentor writers. Now, many barely have time to review for typos (which is why there has been a huge spike in those, too).

Related to the decimation of newsrooms is the need to become sensationalistic. In the old days, you could afford to be “above” hyperbole. Now, it’s all about grabbing eyeballs in a desperate bid to survive. If you notice now on CNN, for example, everything they report on is “BREAKING NEWS!” even if Wolf Blitzer is talking about something that happened yesterday.

There are other factors at play that make the media the way it is today, but these are the most important ones in terms of the discussion here.

Now, given that media environment, there are several aspects of the AI discussion that make it so ripe for hyperbole in the press:

It’s scary – you might not think so, but movies and books such as “I, Robot” and “Transcendence” provide what the average person perceives to be a realistic future. Our lives have already been intruded upon in a very real way by cameras and browser cookies and cell phone tracking and sales history tracking and so on – it already seems like there are forms of AI following us. You and I know that this is not the case – not in terms of real AI or, as you and others call it, machine learning (I’ve also heard the term “cognitive computing”). But, for those who don’t understand it – and that’s 99.99% of the world’s population, give or take – the possibility in their minds of AI taking over our lives even more seems very real.

Isaac Asimov understood this, I think, and came up with the Three Laws of Robotics (which should probably more precisely be named the Three Laws of AI or the Three Laws of Machine Learning). Now, I suspect that he did this as a literary device rather than a personal belief that AI would need these laws. But he realized the appeal it would have to his readers, and why. I think it’s no surprise then that Asimov was also right about human reaction to AI…

We’ll lose jobs – every major disruptor brings this fear. Usually, it’s right, though not to the dire extent first predicted. The Internet is still disrupting – and not coincidentally, it’s causing the loss of many media jobs. Seems like if a computer can diagnose cancer and draw up a treatment plan, what do we need doctors for? Again, I understand myself that this is not really the case, but it’s something that many people would assume. (A great example – most people have tried “Dr. Google” at some point in their lives, and have probably found firsthand that their own doctor is much more likely to give them the real answers… It will take time for people to see AI as a tool, not an oracle.)

It’s unknown – this is related to the first, but the difference in my mind is that people cannot comprehend AI, just as they could not comprehend the Internet before they started using it. Even the people in AI don’t know with any certainty where this will all lead. As humans have done with comets and the moon blocking the sun, we make up stories to explain why it is happening to fill in for the truth. And, as humans have done, these stories more often than not deal with bad portents instead of good fortune.

Journalists too are trying to fumble their way through the darkness to determine what exactly AI is, and more importantly, what it could be in the future. You can sense it in their news reports – they are exploring the possibilities as they go, simply because they don’t have the answers to report. I think this is a natural reaction, too. (In fact I’ve done it myself…)

It’s the Next Big Thing – smart phones, smart TVs, smart homes… Now, we’re on the verge of something going yet another step toward computer self-sufficiency. Loop that in with chat bots and self-driving cars, and we see ourselves on the edge of another huge disrupting technology. Whether the consequences are good or bad, isn’t that just plain exciting? Media is always happy to talk about the Next Best Thing.

“Artificial Intelligence” and “AI” are much sexier and catchier than “Machine Learning” – if you truly want to stop people using the term “AI” because of its implications, you’ll have to come up with something better than “Machine Learning”. With all due respect, it’s not going to catch on.

One of the problems I have with “global warming” is that it’s inaccurate – you get a winter blast in Washington, DC in April, and people say, “Global warming, my ass!” Climate change is a little more precise, but not quite as catchy, so we’re stuck with the former.

Similarly, I think we are stuck with the term “AI” no matter how imprecise it is. I would suggest that the only way for scientists to combat the imprecise – and, as some have suggested, dangerous and irresponsible – use of the word is to take it back, and redefine what it means. Right now is the best time, because nobody does know what it means.

___

I’m not trying to make apologies for the media. I’m not happy with the state it’s in either. Unfortunately, in this economic and technological climate (and, in the United States, the political climate where private corporations for the most part control the media), that state will not likely change. This is why I wanted to jump in on this conversation: the media won’t change anytime soon, so if your goal is to provide good information to the media, you’ll have to educate them rather than try to change the system. A huge task – I tip my hat to you and wish you lots of luck. In my experience, you’ll likely influence some, maybe even many in the media. This blog is a great step in that direction. But even so, it will be very difficult to get rid of all the misconceptions floating out there now and in the future.

In any case, this comment is getting to be longer than your post, so I’ll leave it there. Very interesting argument – I’ll be following along as you go.

~Graham