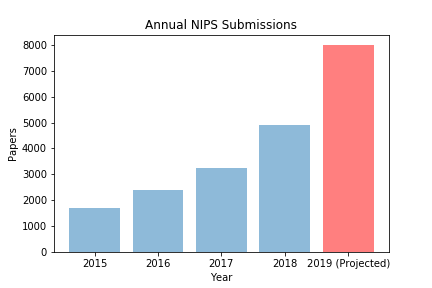

With paper submissions rocketing and the pool of experienced researchers stagnant, machine learning conferences, backs to the wall, have made the inevitable choice to inflate the ranks of peer reviewers, in the hopes that a fortified pool might handle the onslaught.

With nearly every professor and senior grad student already reviewing at capacity, conference organizers have gotten creative, finding reviewers in unlikely places. Reached for comment, ICLR’s program chairs declined to reveal their strategy for scouting out untapped reviewing talent, indicating that these trade secrets might be exploited by rivals NeurIPS and ICML. Fortunately, on condition of anonymity, several (less senior) ICLR officials agreed to discuss a few unusual sources they’ve tapped:

- All of /r/machinelearning

- Twitter users who follow @ylecun

- Holders of registered .ai & .ml domains

- Commenters from ML articles posted to Hacker News

- YouTube commenters on Siraj Raval deep learning rap videos

- Employees of entities registered as owners of .ai & .ml domains

- Everyone camped within 4° of Andrej Karpathy at Burning Man

- GitHub users who forked TensorFlow, PyTorch, or MXNet in the last 6 mos.

- A joint venture with Udacity to make reviewing for ICLR a course project for their Intro to Deep Learning class

With so many new referees, perhaps it’s not surprising to see, sprinkled among the stronger, more traditional reviews, a number of unusual ones: some oddly short, some oddly … net-speak (“imho, srs paper 4 real”), and some that challenge assumptions about what shared knowledge is prerequisite for membership in this community (“who are you to say this matrix is degenerate?”).

However, these reviews, which to a casual onlooker might signify incompetence, belie the earnest efforts of a cadre of new reviewers to rise to the occasion. I know this because, fortuitously, many of these new reviewers are avid readers of Approximately Correct, and for the last few weeks my inbox has been overflowing with sincere questions from well-meaning neophytes.

If you’ve ever taught a course before, it might not come as a surprise that their questions overlapped substantially. So while we don’t normally post Q&A-style articles on Approximately Correct, an exception here seems both appropriate and efficient. I’ve compiled a few exemplary questions and provided succinct answers in a rare Q&A piece that we’ll call “Is This a Paper Review?”

Henry in Pasadena writes:

Dear Approximately Correct,

I was assigned to review a paper. I read the abstract and formed an opinion tangentially related to the topic of the paper. Then I wrote a paragraph partly expressing my opinion and partly arguing with one of the anonymous commenters on an unrelated topic. This is standard practice on Hacker News, where I have earned over 2000 upvotes for similar reviews, which together form the basis of my qualifications to review for ICLR. Is this a paper review?

AC: No, that is not a paper review.

Pandit in Mysore writes:

Dear Approximately Correct,

I began to read a paper about the convergence of gradient descent. Once they said “limit” I got lost, so I skipped to the back of the paper, where I noticed that they did not do any experiments on ImageNet. I wrote a one-line review. The title said “Not an expert but WTF?” and the body said “No experiments on ImageNet?” Is this a paper review?

AC: No, that is not a paper review.

Xiao in Shanghai writes:

Dear Approximately Correct,

I trained an LSTM on past ICLR reviews. Then I ran it with the softmax temperature set to .01. The output was “Not novel [EOS].” I entered this in OpenReview. Is this a paper review?

AC: No, that is not a paper review.

Jim in Boulder writes:

Dear Approximately Correct,

When reviewing this paper, I noticed that it was vaguely similar in certain ways to an idea that I had in 1987. While I like the idea (as you might imagine), I assigned the paper a middling score, with one half of the review a solid discussion of the technical work and the other half devoted exclusively to enumerating my own papers and demanding that the author cite them. Is this a paper review?

AC: This sounds like a problematic paper review. But it could be a good review if you increase your score to what you think it might be were you dispassionate, tone down the stuff about your own papers, and send a thoughtful note to the metareviewer indicating a minor conflict of interest.

Rachel in New Jersey writes:

I read the paper. In the first 2 pages, there were 10 mathematical mistakes, including some that made the entire contribution of the paper obviously wrong. I stopped reading to conserve my time and wrote a short one-paragraph review that indicated the mistakes and said “not suitable for publication at ICLR.” Is this a paper review?

AC: While ordinarily so short a review might not be appropriate, this is a clear exception. Excellent review!

This piece was loosely inspired by Jason Feifer’s “Is This a Selfie?”

Love this Zach! You always nail the border between satirical and impactful. Although I have to disagree with your #5 bullet point. Siraj Raval has an amazing channel and does good work, but the first AI rap was over a year before Siraj’s videos. We did this! You can find it here: https://www.youtube.com/watch?v=Js0HYmH31ko Maybe your list should have been 10 bullet points? 🙂

Very worthwhile article. More of us who have been doing this for a while need to involve our students and mentees in the process. Pity the poor reviewer with no experience upon which to reply beyond a collection of rejections!

1) It is the editor’s job to screen out obvious junk and reject it without getting a report. Are the editors not doing this, or are the papers being submitted largely not junk? Or maybe it’s hard to tell whether a paper is junk.

2) Grad students can of course be referees. Undergrads … maybe.

3) As a grad student my advisor asked me to referee two papers for STOC. I was honored! He gave me one good one and one bad one. I think he wanted to know if I could tell the difference. I could!

4) I am no longer honored to be asked to referee a paper.