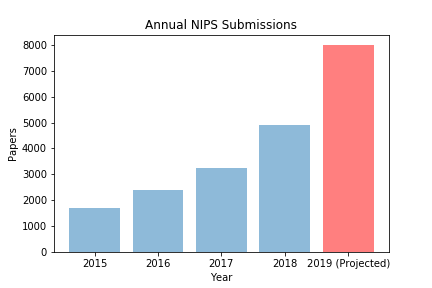

With paper submissions rocketing and the pool of experienced researchers stagnant, machine learning conferences, backs to the wall, have made the inevitable choice to inflate the ranks of peer reviewers, in the hopes that a fortified pool might handle the onslaught.

With nearly every professor and senior grad student already reviewing at capacity, conference organizers have gotten creative, finding reviewers in unlikely places. Reached for comment, ICLR’s program chairs declined to reveal their strategy for scouting out untapped reviewing talent, indicating that these trade secrets might be exploited by rivals NeurIPS and ICML. Fortunately, on condition of anonymity, several (less senior) ICLR officials agreed to discuss a few unusual sources they’ve tapped:

- All of /r/machinelearning

- Twitter users who follow @ylecun

- Holders of registered .ai & .ml domains

- Commenters from ML articles posted to Hacker News

- YouTube commenters on Siraj Raval's deep learning rap videos

- Employees of entities registered as owners of .ai & .ml domains

- Everyone camped within 4° of Andrej Karpathy at Burning Man

- GitHub handles that forked TensorFlow, PyTorch, or MXNet in the last 6 mos.

- A joint venture with Udacity to make reviewing for ICLR a course project for their Intro to Deep Learning class