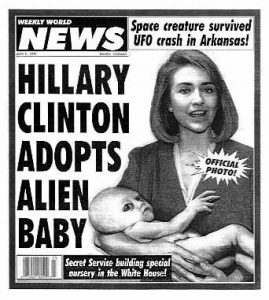

On Friday, Donald J. Trump was sworn in as the 45th president of the United States. The inauguration followed a bruising primary and general election, in which social media played an unprecedented role. In particular, the proliferation of fake news emerged as a dominant storyline. Throughout the campaign, explicitly false stories circulated through the internet’s echo chambers. Some fake stories originated as rumors, others were created for profit and monetized with click-based advertisements, and according to US Director of National Intelligence James Clapper, many fake news stories were orchestrated by the Russian government with the intention of influencing the results. While it is not possible to observe the counterfactual, many believe that the election’s outcome hinged on the influence of these stories.

For context, consider one illustrative case as described by the New York Times. On November 9th, 35-year-old marketer Eric Tucker tweeted a picture of several buses, claiming that they were transporting paid protesters to demonstrate against Trump. The post quickly went viral, receiving over 16,000 shares on Twitter and 350,000 shares on Facebook. Trump and his surrogates joined in, promoting the story through social media. Tucker’s claim turned out to be a fabrication. Nevertheless, it likely reached millions of people, more than many conventional news stories do.

A number of critics cast blame on technology companies like Facebook, Twitter, and Google, suggesting that they have a responsibility to address the fake news epidemic because their algorithms influence who sees which stories. Some linked the fake news phenomenon to the idea that personalized search results and news feeds create a filter bubble, a dynamic in which readers only encounter stories that they are likely to click on, comment on, or like. As a consequence, readers might only encounter stories that confirm pre-existing beliefs.

Facebook, in particular, has been strongly criticized for its trending news widget, which operated (at the time) without human intervention, giving viral items a spotlight, however defamatory or false. In September, Facebook’s trending news box promoted a story titled ‘Michelle Obama was born a man’. Some have wondered why Facebook, despite its massive investment in artificial intelligence (machine learning), hasn’t developed an automated solution to the problem.